Seq2seq is a family of machine learning approaches used for natural language processing. Applications include language translation, image captioning,... 9 KB (1,077 words) - 02:31, 4 May 2024

he established with others from Google. He co-invented the doc2vec and seq2seq models in natural language processing. Le also initiated and led the AutoML... 9 KB (702 words) - 16:35, 16 April 2024

language models. At the time, the focus of the research was on improving Seq2seq techniques for machine translation, but even in their paper the authors... 5 KB (396 words) - 21:58, 30 April 2024

approach instead of older, statistical approaches. In 2014, a 380M-parameter seq2seq model for machine translation using two long short-term memory networks (LSTMs)... 66 KB (8,256 words) - 18:24, 7 May 2024

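The 2014 model mentioned above used two LSTMs: one reads the source sentence into a fixed-size state, and a second generates the target sentence from that state. The sketch below illustrates only that encoder-decoder shape, assuming NumPy, a single-layer LSTM on each side, small random (untrained) weights and greedy decoding. `LSTMCell`, `Seq2Seq`, `bos_id` and `eos_id` are hypothetical names chosen for illustration. This is not the original model, which had about 380M trained parameters; training is omitted entirely, so the decoded token ids are arbitrary.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LSTMCell:
    """One LSTM cell; the four gates are stacked as [input, forget, candidate, output]."""

    def __init__(self, input_size, hidden_size, rng):
        scale = 1.0 / np.sqrt(hidden_size)
        self.hidden_size = hidden_size
        # A single weight matrix acting on the concatenation [x; h].
        self.W = rng.uniform(-scale, scale, (4 * hidden_size, input_size + hidden_size))
        self.b = np.zeros(4 * hidden_size)

    def step(self, x, h, c):
        H = self.hidden_size
        z = self.W @ np.concatenate([x, h]) + self.b
        i = sigmoid(z[0:H])          # input gate
        f = sigmoid(z[H:2 * H])      # forget gate
        g = np.tanh(z[2 * H:3 * H])  # candidate cell values
        o = sigmoid(z[3 * H:])       # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
        return h, c


class Seq2Seq:
    """Hypothetical toy model: encoder LSTM -> final (h, c) -> decoder LSTM."""

    def __init__(self, src_vocab, tgt_vocab, emb_dim=8, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.hidden = hidden
        self.src_emb = rng.normal(0.0, 0.1, (src_vocab, emb_dim))
        self.tgt_emb = rng.normal(0.0, 0.1, (tgt_vocab, emb_dim))
        self.encoder = LSTMCell(emb_dim, hidden, rng)
        self.decoder = LSTMCell(emb_dim, hidden, rng)
        # Projects a decoder hidden state to one logit per target token.
        self.proj = rng.normal(0.0, 0.1, (tgt_vocab, hidden))

    def encode(self, src_ids):
        # Read the whole source sequence; only the final state is passed on.
        h = np.zeros(self.hidden)
        c = np.zeros(self.hidden)
        for tok in src_ids:
            h, c = self.encoder.step(self.src_emb[tok], h, c)
        return h, c

    def greedy_decode(self, src_ids, bos_id, eos_id, max_len=10):
        # The decoder starts from the encoder's final state and feeds back its own argmax token.
        h, c = self.encode(src_ids)
        tok, output = bos_id, []
        for _ in range(max_len):
            h, c = self.decoder.step(self.tgt_emb[tok], h, c)
            tok = int(np.argmax(self.proj @ h))
            if tok == eos_id:
                break
            output.append(tok)
        return output


# Usage: untrained weights, so the printed ids carry no meaning.
model = Seq2Seq(src_vocab=20, tgt_vocab=15)
print(model.greedy_decode([3, 7, 2, 9], bos_id=0, eos_id=1))
```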
"Attention Is All You Need". The paper aimed to improve on the Seq2seq technology of 2014 and was based mainly on the attention mechanism developed by... 128 KB (11,573 words) - 07:58, 12 May 2024

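The Transformer paper named above builds on attention. The variant it defines, scaled dot-product attention, computes softmax(QKᵀ/√d_k)V for queries Q, keys K and values V with key dimension d_k. Below is a minimal NumPy sketch under stated assumptions: a single head, no masking, no learned projections (the raw encoder states serve directly as keys and values), and hypothetical function names. It is not the earlier attention mechanism the snippet credits, whose scoring function may differ.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum before exponentiating for numerical stability.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> context (n_q, d_v), weights (n_q, n_k)."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # similarity of each query to each key
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


# Usage: one decoder state (query) attending over five encoder states (keys = values here).
rng = np.random.default_rng(0)
encoder_states = rng.normal(size=(5, 4))
decoder_state = rng.normal(size=(1, 4))
context, weights = scaled_dot_product_attention(decoder_state, encoder_states, encoder_states)
print(weights.round(3))
```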
to 10²² FLOPs) of compute, which was 1.5 orders of magnitude larger than the Seq2seq model of 2014 (but about 2× smaller than GPT-J-6B in 2021). Google Translate's... 20 KB (1,654 words) - 01:39, 8 May 2024

Machine Translation Architectures". arXiv:1703.03906 [cs.CV]. "Pytorch.org seq2seq tutorial". Retrieved December 2, 2021. Bahdanau, Dzmitry; Cho, Kyunghyun;... 28 KB (2,185 words) - 01:06, 9 May 2024

of Electrical Engineering and Computer Science. Vinyals co-invented the seq2seq model for machine translation along with Ilya Sutskever and Quoc Viet Le... 6 KB (413 words) - 18:43, 10 September 2023

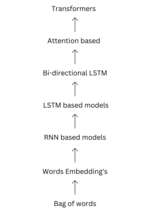

inability to capture sequential data, which later led to the development of Seq2seq approaches, including recurrent neural networks that made use of long... 10 KB (907 words) - 10:25, 20 April 2024