Science and technology in the United States


Science and technology in the United States has a long history, producing many important figures and developments in the field. The United States of America came into being around the Age of Enlightenment (1685 to 1815), an era in Western philosophy, centered on the 18th century, in which writers and thinkers rejected the perceived superstitions of the past and advocated reason as the primary source of legitimacy and authority, emphasizing intellectual, scientific, and cultural life. Enlightenment philosophers envisioned a "republic of science," where ideas would be exchanged freely and useful knowledge would improve the lot of all citizens.

The United States Constitution itself reflects the desire to encourage scientific creativity. It gives the United States Congress the power "to promote the progress of science and useful arts, by securing for limited times to authors and inventors the exclusive right to their respective writings and discoveries."[1] This clause formed the basis for the U.S. patent and copyright systems, whereby creators of original art and technology receive a government-granted monopoly for a limited period, after which their work becomes free to all citizens, thereby enriching the public domain.[2]

Early American science


In the early decades of its history, the United States was relatively isolated from Europe and also rather poor. At this stage, America's scientific infrastructure was still quite primitive compared to the long-established societies, institutes, and universities in Europe.

Eight of America's founding fathers were scientists of some repute. Benjamin Franklin conducted a series of experiments that deepened human understanding of electricity. Among other things, he proved what had been suspected but never before shown: that lightning is a form of electricity. Franklin also invented such conveniences as bifocal eyeglasses and conceived the mid-room furnace known as the "Franklin stove". His design was flawed, however: the furnace vented smoke from its base, and because it lacked a chimney to "draw" fresh air up through the central chamber, the fire would soon go out. It took David R. Rittenhouse, another hero of early Philadelphia, to improve Franklin's design by adding an L-shaped exhaust pipe that drew air through the furnace and vented its smoke up and along the ceiling, then into an intramural chimney and out of the house.[3]

Thomas Jefferson (1743–1826) was among the most influential leaders in early America. During the American Revolutionary War (1775–83), he served in the Virginia legislature and the Continental Congress and as governor of Virginia. He wrote the Declaration of Independence and later served as U.S. minister to France, U.S. secretary of state, vice president under John Adams (1735–1826), and the third U.S. president. During Jefferson's two terms in office (1801–1809), the U.S. purchased the Louisiana Territory, and Lewis and Clark explored the vast new acquisition.

After leaving office, Jefferson retired to his Virginia plantation, Monticello, and helped found the University of Virginia.[4] He was also a student of agriculture who introduced various types of rice, olive trees, and grasses into the New World. He stressed the scientific aspect of the Lewis and Clark expedition (1804–06),[5] which explored the Pacific Northwest; detailed, systematic information on the region's plants and animals was one of that expedition's legacies.[6]

Like Franklin and Jefferson, most American scientists of the late 18th century were involved in the struggle to win American independence and forge a new nation. These scientists included the astronomer David Rittenhouse, the medical scientist Benjamin Rush, and the natural historian Charles Willson Peale.[6]

During the American Revolution, Rittenhouse helped design the defenses of Philadelphia and built telescopes and navigation instruments for the United States' military services. After the war, Rittenhouse designed road and canal systems for the state of Pennsylvania. He later returned to studying the stars and planets and gained a worldwide reputation in that field.[6]

As a surgeon general in the Continental Army, Benjamin Rush saved the lives of many soldiers during the American Revolutionary War by promoting hygiene and public health practices. By introducing new medical treatments, he made the Pennsylvania Hospital in Philadelphia an example of medical enlightenment, and after his military service, Rush established the first free clinic in the United States.[6]

Charles Willson Peale is best remembered as an artist, but he also was a natural historian, inventor, educator, and politician. He created the first major museum in the United States, the Peale Museum in Philadelphia, which housed the young nation's only collection of North American natural history specimens. Peale excavated the bones of an ancient mastodon near West Point, New York; he spent three months assembling the skeleton, and then displayed it in his museum. The Peale Museum started an American tradition of making the knowledge of science interesting and available to the general public.[6]

Science immigration

American political leaders' enthusiasm for knowledge also helped ensure a warm welcome for scientists from other countries. A notable early immigrant was the British chemist Joseph Priestley, who was driven from his homeland because of his dissenting politics. Priestley, who migrated to the United States in 1794, was the first of thousands of talented scientists drawn to the United States in search of a free, creative environment.[6]

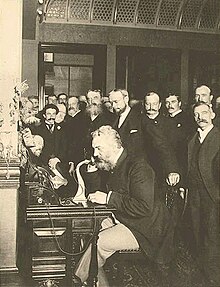

Other scientists came to the United States to take part in the nation's rapid growth. Alexander Graham Bell, who arrived from Scotland by way of Canada in 1872, developed and patented the telephone and related inventions. Charles Proteus Steinmetz, who came from Germany in 1889, developed new alternating-current electrical systems at General Electric Company.[6] Vladimir Zworykin, who arrived from Russia in 1919, brought with him his knowledge of X-rays and cathode ray tubes and later won his first patent on a television system he invented. The Serbian Nikola Tesla went to the United States in 1884 and would later adapt the principle of the rotating magnetic field in the development of an alternating current induction motor and polyphase system for the generation, transmission, distribution and use of electrical power.[7]

Into the early 1900s, Europe remained the center of scientific research, notably in England and Germany. From the 1920s onwards, the tensions heralding the onset of World War II spurred sporadic but steady scientific emigration, or "brain drain", from Europe. Many of these emigrants were Jewish scientists who feared the repercussions of anti-Semitism, especially in Germany and Italy, and sought sanctuary in the United States.[8] One of the first to do so was Albert Einstein, in 1933. At his urging, and often with his support, a good percentage of Germany's theoretical physics community, previously the best in the world, left for the United States. Enrico Fermi came from Italy in 1938 and led the work that produced the world's first self-sustaining nuclear chain reaction. Many other scientists of note moved to the U.S. during this same emigration wave, including Niels Bohr, Victor Weisskopf, Otto Stern, and Eugene Wigner.[9]

Several scientific and technological breakthroughs during the Atomic Age were the handiwork of such immigrants, who recognized the potential threats and uses of new technology. For instance, it was the German-born Einstein and his Hungarian colleague, Leó Szilárd, who took the initiative and convinced President Franklin D. Roosevelt to pursue the pivotal Manhattan Project.[10] Many physicists instrumental to the project were also European immigrants, such as the Hungarian Edward Teller, "father of the hydrogen bomb,"[11] and German Nobel laureate Hans Bethe. Their scientific contributions, combined with Allied resources and facilities, helped establish the United States during World War II as an unrivaled scientific juggernaut. In fact, the Manhattan Project's Operation Alsos and its components, while not designed to recruit European scientists, successfully collected and evaluated Axis military scientific research at the end of the war, especially that of the German nuclear energy project, only to conclude that it was years behind its American counterpart.[12]

When World War II ended, the United States, the United Kingdom and the Soviet Union were all intent on capitalizing on Nazi research and competed for the spoils of war. While President Harry S. Truman refused to provide sanctuary to ideologically committed members of the Nazi party, the Office of Strategic Services introduced Operation Paperclip, conducted under the Joint Intelligence Objectives Agency. This program covertly offered otherwise ineligible intellectuals and technicians whitewashed dossiers, biographies, and employment. Ex-Nazi scientists overseen by the JIOA had been employed by the U.S. military since the defeat of the Nazi regime in Project Overcast, but Operation Paperclip ventured to systematically allocate German nuclear and aerospace research and scientists to military and civilian posts, beginning in August 1945. Until the program's termination in 1990, Operation Paperclip was said to have recruited over 1,600 such employees in a variety of professions and disciplines.[13]

In the first phases of Operation Paperclip, these recruits mostly included aerospace engineers from the German V-2 combat rocket program and experts in aerospace medicine and synthetic fuels. Perhaps the most influential of these was Wernher von Braun, who had worked on the Aggregate rockets (the first rocket program to reach outer space) and had been chief designer of the V-2 rocket program. Upon reaching American soil, von Braun first worked on the United States Army's ballistic missile program before his team was reassigned to NASA.[14] He is often credited as "The Father of Rocket Science"; his work on the Redstone rocket and the successful deployment of the Explorer 1 satellite as a response to Sputnik 1 marked the beginning of the American space program and, therefore, of the Space Race. Von Braun's subsequent development of the Saturn V rocket for NASA in the mid-to-late 1960s resulted in the first crewed landing on the Moon, the Apollo 11 mission in 1969.

In the post-war era, the U.S. was left in a position of unchallenged scientific leadership, being one of the few industrial countries not ravaged by war. Additionally, science and technology were credited with contributing greatly to the Allied victory and were regarded as crucial in the Cold War era. This enthusiasm simultaneously rejuvenated American industry and celebrated Yankee ingenuity, instilling zealous nationwide investment in "Big Science" and state-of-the-art, government-funded facilities and programs. This state patronage presented appealing careers to the intelligentsia and further consolidated the scientific preeminence of the United States. As a result, the U.S. government became, for the first time, the largest single supporter of basic and applied scientific research. By the mid-1950s, the research facilities in the U.S. were second to none, and scientists were drawn to the U.S. for this reason alone. The changing pattern can be seen in the winners of the Nobel Prize in physics and chemistry. During the first half-century of Nobel Prizes – from 1901 to 1950 – American winners were in a distinct minority in the science categories. Since 1950, Americans have won approximately half of the Nobel Prizes awarded in the sciences.[15] See the List of Nobel laureates by country.

The American "brain gain" continued throughout the Cold War, as tensions steadily escalated in the Eastern Bloc, resulting in a steady trickle of defectors, refugees and emigrants. The partition of Germany, for one, prompted over three and a half million East Germans – the Republikflüchtlinge – to cross into West Berlin by 1961. Most of them were young, well-qualified, educated professionals or skilled workers[16] – the intelligentsia – and their departure exacerbated human capital flight from the GDR to the benefit of Western countries, including the United States.

Technology inflows from abroad have played an important role in the development of the United States, especially in the late nineteenth century. A favorable security environment allowed relatively low defense spending, while high trade barriers encouraged the development of domestic manufacturing industries and the inflow of foreign technologies.[17]

American applied science


During the 19th century, Britain, France, and Germany were at the forefront of new ideas in science and mathematics.[18][19] But if the United States lagged behind in the formulation of theory, it excelled in using theory to solve problems: applied science. This tradition had been born of necessity. Because Americans lived so far from the well-springs of Western science and manufacturing, they often had to figure out their own ways of doing things. When Americans combined theoretical knowledge with "Yankee ingenuity", the result was a flow of important inventions. The great American inventors include Robert Fulton (the steamboat); Samuel Morse (the telegraph); Eli Whitney (the cotton gin); Cyrus McCormick (the reaper); and Thomas Alva Edison, with more than a thousand inventions credited to his name. His research laboratory developed the phonograph, the first long-lasting light bulb, and the first viable movie camera.[20]

Edison was not always the first to devise a scientific application, but he was frequently the one to bring an idea to a practical finish. For example, the British engineer Joseph Swan built an incandescent electric lamp in 1860, almost 20 years before Edison. But Edison's light bulbs lasted much longer than Swan's, and they could be turned on and off individually, while Swan's bulbs could be used only in a system where several lights were turned on or off at the same time. Edison followed up his improvement of the light bulb with the development of electrical generating systems. Within 30 years, his inventions had introduced electric lighting into millions of homes.

Another landmark application of scientific ideas to practical uses was the innovation of the brothers Wilbur and Orville Wright. In the 1890s, they became fascinated with accounts of German glider experiments and began their own investigation into the principles of flight. Combining scientific knowledge and mechanical skills, the Wright brothers built and flew several gliders. Then, on December 17, 1903, they made the first sustained and controlled heavier-than-air powered flight.[21] The automobile companies of Ransom E. Olds (Oldsmobile) and Henry Ford (Ford Motor Company) popularized the assembly line in the early 20th century. The rise of fascism and Nazism in the 1920s and 30s led many European scientists, such as Albert Einstein, Enrico Fermi, and John von Neumann, to immigrate to the United States.[22]

An American invention that was barely noticed in 1947 went on to usher in the Information Age. In that year John Bardeen, William Shockley, and Walter Brattain of Bell Laboratories drew upon highly sophisticated principles of quantum physics to invent the transistor, a key component in almost all modern electronics, which led to the development of microprocessors, software, personal computers, and the Internet.[23] As a result, book-sized computers of today can outperform room-sized computers of the 1960s, and there has been a revolution in the way people live – in how they work, study, conduct business, and engage in research.

World War II had a profound impact on the development of science and technology in the United States. Before the war, the federal government assumed little responsibility for supporting scientific development. During the war, the federal government and the scientific community formed a new cooperative relationship. After the war, the federal government took the leading role in supporting science and technology, and in the following years it supported the establishment of a modern national science and technology system, making America a world leader in science and technology.[24]

Part of America's past and current preeminence in applied science has been due to its vast research and development budget, which at $401.6bn in 2009 was more than double China's $154.1bn and over 25% greater than the European Union's $297.9bn.[25]

The Atomic Age and "Big Science"


One of the most spectacular – and controversial – accomplishments of US technology has been the harnessing of nuclear energy. The concepts that led to the splitting of the atom were developed by the scientists of many countries, but the conversion of these ideas into the reality of nuclear fission was accomplished in the United States in the early 1940s, both by many Americans and, to a tremendous degree, by European intellectuals fleeing the growing conflagration sparked by Adolf Hitler and Benito Mussolini in Europe.

During these crucial years, a number of the most prominent European scientists, especially physicists, immigrated to the United States, where they would do much of their most important work; these included Hans Bethe, Albert Einstein, Enrico Fermi, Leó Szilárd, Edward Teller, Felix Bloch, Emilio Segrè, John von Neumann, and Eugene Wigner, among many, many others. American academics worked hard to find positions at laboratories and universities for their European colleagues.

After German physicists split a uranium nucleus in 1938, a number of scientists concluded that a nuclear chain reaction was feasible. The Einstein–Szilárd letter to President Franklin D. Roosevelt warned that this breakthrough would permit the construction of "extremely powerful bombs." This warning prompted an executive order to investigate the use of uranium as a weapon, an effort later superseded during World War II by the Manhattan Project, the full Allied effort to be the first to build an atomic bomb. The project bore fruit when the first such bomb was exploded in New Mexico on July 16, 1945.

The development of the bomb and its use against Japan in August 1945 initiated the Atomic Age, a time of anxiety over weapons of mass destruction that has lasted through the Cold War and down to the anti-proliferation efforts of today. Even so, the Atomic Age has also been characterized by peaceful uses of nuclear technology, as in the advances in nuclear power and nuclear medicine.

Along with the production of the atomic bomb, World War II also began an era known as "Big Science", with increased government patronage of scientific research. The advantage of a scientifically and technologically sophisticated country became all too apparent during wartime, and in the ideological Cold War that followed, scientific strength, even in peacetime applications, became too important for the government to leave to philanthropy and private industry alone. This increased expenditure on scientific research and education propelled the United States to the forefront of the international scientific community, an amazing feat for a country which only a few decades before still had to send its most promising students to Europe for extensive scientific education.

The first US commercial nuclear power plant started operation in Illinois in 1956. At the time, the future for nuclear energy in the United States looked bright. But opponents criticized the safety of power plants and questioned whether safe disposal of nuclear waste could be assured. A 1979 accident at Three Mile Island in Pennsylvania turned many Americans against nuclear power. The cost of building a nuclear power plant escalated, and other, more economical sources of power began to look more appealing. During the 1970s and 1980s, plans for several nuclear plants were cancelled, and the future of nuclear power remains in a state of uncertainty in the United States.

Meanwhile, American scientists have been experimenting with renewable energy sources, including solar power. Although solar power generation is still not economical in much of the United States, recent developments might make it more affordable.

Telecom and technology


For the past 80 years, the United States has been integral in fundamental advances in telecommunications and technology. For example, AT&T's Bell Laboratories spearheaded the American technological revolution with a series of inventions including the first practical light-emitting diode (LED), the transistor, the C programming language, and the Unix computer operating system.[26] SRI International and Xerox PARC in Silicon Valley helped give birth to the personal computer industry, while ARPA and NASA funded the development of the ARPANET and the Internet.[27]

Herman Hollerith was just a twenty-year-old engineer when he realized the need for a better way for the U.S. government to conduct its census, and he proceeded to develop electromechanical tabulators for that purpose. The net effect of the many changes from the 1880 census (the larger population, the data items to be collected, the Census Bureau headcount, the scheduled publications, and the use of Hollerith's electromechanical tabulators) was to reduce the time required to process the census from eight years for the 1880 census to six years for the 1890 census.[28] That success launched the Tabulating Machine Company. By the 1960s, the company had been renamed International Business Machines, and IBM dominated business computing.[29] IBM revolutionized the industry by bringing out the first comprehensive family of computers, the System/360, which caused many of its competitors to either merge or go bankrupt and left IBM in an even more dominant position.[30] IBM is known for its many inventions, including the floppy disk, introduced in 1971; supermarket checkout products; and the IBM 3614 Consumer Transaction Facility, introduced in 1973, an early form of today's automated teller machines.[31]

In 1983, the DynaTAC 8000x became the first commercially available handheld mobile phone. From 1983 to 2014, worldwide mobile phone subscriptions grew to over seven billion, enough to provide one for every person on Earth.[32]

The Space Age


During the Cold War, competition for superior missile capability led to the Space Race between the United States and Soviet Union.[33][34] American Robert Goddard was one of the first scientists to experiment with rocket propulsion systems. In his small laboratory in Worcester, Massachusetts, Goddard worked with liquid oxygen and gasoline to propel rockets into the atmosphere, and in 1926 successfully fired the world's first liquid-fuel rocket which reached a height of 12.5 meters.[35] Over the next 10 years, Goddard's rockets achieved modest altitudes of nearly two kilometers, and interest in rocketry increased in the United States, Britain, Germany, and the Soviet Union.[36]

As Allied forces advanced during World War II, both American and Soviet forces searched for top German scientists who could be claimed as spoils for their countries. The American effort to bring home German rocket technology in Operation Paperclip, including the recruitment of German rocket scientist Wernher von Braun (who would later head a NASA center), stands out in particular.

Expendable rockets provided the means for launching artificial satellites, as well as crewed spacecraft. In 1957, the Soviet Union launched the first satellite, Sputnik 1, and the United States followed with Explorer 1 in 1958. The first human spaceflights were made in early 1961, first by Soviet cosmonaut Yuri Gagarin and then by American astronaut Alan Shepard.

From those first tentative steps, to the Apollo 11 landing on the Moon and the partially reusable Space Shuttle, the American space program brought forth a breathtaking display of applied science. Communications satellites transmit computer data, telephone calls, and radio and television broadcasts. Weather satellites furnish the data necessary to provide early warnings of severe storms. The United States also developed the Global Positioning System (GPS), the world's pre-eminent satellite navigation system.[37] Interplanetary probes and space telescopes began a golden age of planetary science and advanced a wide variety of astronomical work.

On April 20, 2021, MOXIE produced oxygen from Martian atmospheric carbon dioxide using solid oxide electrolysis, the first experimental extraction of a natural resource from another planet for human use.[38] In 2023, the United States ranked 3rd in the Global Innovation Index.[39]

Medicine and health care


As in physics and chemistry, Americans have dominated the Nobel Prize for physiology or medicine since World War II. The private sector has been the focal point for biomedical research in the United States, and has played a key role in this achievement.

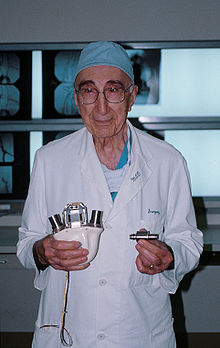

Maurice Hilleman, a well-known American virologist, is remembered for having developed more than 40 vaccines, a record in the field of medicine. He contributed to the creation of vaccines against the Asian flu of 1957, which broke out in Guizhou, and the Hong Kong flu of 1968, helping to prepare and distribute the vaccine doses. He was also responsible for the creation of vaccines against measles, mumps, hepatitis A, hepatitis B, chickenpox, Neisseria meningitidis, Streptococcus pneumoniae and Haemophilus influenzae. He was awarded the Distinguished Service Medal by the US Army for his work.[1]

As of 2000, for-profit industry funded 57%, non-profit private organizations such as the Howard Hughes Medical Institute funded 7%, and the tax-funded National Institutes of Health (NIH) funded 36% of medical research in the United States.[40] However, by 2003, the NIH accounted for only 28% of medical research funding; funding by private industry increased 102% from 1994 to 2003.[41]

The NIH consists of 24 separate institutes in Bethesda, Maryland. The goal of NIH research is knowledge that helps prevent, detect, diagnose, and treat disease and disability. At any given time, grants from the NIH support the research of about 35,000 principal investigators. Five Nobel Prize-winners have made their prize-winning discoveries in NIH laboratories.

NIH research has helped make possible numerous medical achievements. For example, mortality from heart disease, the number-one killer in the United States, dropped 41 percent between 1971 and 1991. The death rate for strokes decreased by 59 percent during the same period. Between 1991 and 1995, the cancer death rate fell by nearly 3 percent, the first sustained decline since national record-keeping began in the 1930s. And today more than 70 percent of children who get cancer are cured.

With the help of the NIH, molecular genetics and genomics research have revolutionized biomedical science. In the 1980s and 1990s, researchers performed the first trial of gene therapy in humans and are now able to locate, identify, and describe the function of many genes in the human genome.

Research conducted by universities, hospitals, and corporations also contributes to improvement in diagnosis and treatment of disease. NIH funded the basic research on Acquired Immune Deficiency Syndrome (AIDS), for example, but many of the drugs used to treat the disease have emerged from the laboratories of the American pharmaceutical industry; those drugs are being tested in research centers across the country.

See also

- Science policy of the United States

- Biomedical research in the United States

- Technological and industrial history of the United States

- Timeline of United States inventions

- Timeline of United States discoveries

- National Inventors Hall of Fame

- United States Patent and Trademark Office

- NASA spinoff technologies

- Yankee ingenuity

References

- ^ "Guide to the Constitution".

- ^ "A Brief History of U.S. Patent Law".

- ^ "Lemelson-MIT Program". Archived from the original on February 19, 2003.

- ^ "Thomas Jefferson, a Brief Biography - Thomas Jefferson's Monticello".

- ^ "Thomas Jefferson and the Lewis and Clark Expedition - Thomas Jefferson's Monticello".

- ^ a b c d e f g "American Spaces – Connecting YOU with U.S."

- ^ "Tesla's Biography".

- ^ Angelo, Joseph A.. Nuclear Technology (Westport: Greenwood, 2004), 17

- ^ Isaacson, Walter. Einstein: His Life and His Universe (New York: Simon & Schuster, 2007), 407

- ^ Isaacson, Walter. Einstein: His Life and His Universe (New York: Simon & Schuster, 2007), 473

- ^ Teller, Edward. Memoirs: A Twentieth Century Journey in Science and Politics (Cambridge: Perseus, 2001), 546

- ^ Gimbel, John. “German Scientists, United States Denazification Policy, and the 'Paperclip Conspiracy,'” The International History Review, Vol. 12, No. 3 (Aug., 1990): 448-449.

- ^ Hunt, Linda. Secret Agenda: The United States Government, Nazi Scientists, and Project Paperclip, 1945 to 1990 (New York: St.Martin's Press, 1991).

- ^ Ward, Bob. Doctor Space (Annapolis: Naval Institute Press, 2005), 83

- ^ Kay, Alison J.. What If There's Nothing Wrong? (BalboaPress November 27, 2012)

- ^ Dowty, Alan. Closed Borders: The Contemporary Assault on Freedom of Movement (Binghampton: Twentieth Century Fund, 1987.), 122.

- ^ National Research Council (U.S.). (1997). Maximizing U.S. Interests in Science and Technology Relations with Japan: Committee on Japan Framework Statement and Report of the Competitiveness Task Force. Washington, D.C.: National Academies Press.

- ^ Walker, William (1993). "National Innovation Systems: Britain". In Nelson, Richard R. (ed.). National innovation systems : a comparative analysis. New York: Oxford University Press. pp. 61–4. ISBN 0195076176.

- ^ Ulrich Wengenroth (2000). "Science, Technology, and Industry in the 19th Century" (PDF). Munich Centre for the History of Science and Technology. Retrieved June 13, 2016.

- ^ "Thomas Edison's Most Famous Inventions". Thomas A Edison Innovation Foundation. Archived from the original on March 16, 2016. Retrieved January 21, 2015.

- ^ Benedetti, François (December 17, 2003). "100 Years Ago, the Dream of Icarus Became Reality". Fédération Aéronautique Internationale (FAI). Archived from the original on September 12, 2007. Retrieved August 15, 2007.

- ^ Fraser, Gordon (2012). The Quantum Exodus: Jewish Fugitives, the Atomic Bomb, and the Holocaust. New York: Oxford University Press. ISBN 978-0-19-959215-9.

- ^ Sawyer, Robert Keith (2012). Explaining Creativity: The Science of Human Innovation. Oxford University Press. p. 256. ISBN 978-0-19-973757-4.

- ^ Mowery, D. C., & Rosenberg, N. (1998). Paths of Innovation: Technological Change in 20th-Century America. Cambridge, UK: Cambridge University Press.

- ^ The Sources and Uses of U.S. Science Funding.

- ^ "AT&T Labs Fosters Innovative Technology - AT&T Labs".

- ^ "012 ARPA History".

- ^ Report of the Commissioner of Labor In Charge of The Eleventh Census to the Secretary of the Interior for the Fiscal Year Ending June 30, 1895 Washington, D.C., July 29 1895 Page 9: You may confidently look for the rapid reduction of the force of this office after the 1st of October, and the entire cessation of clerical work during the present calendar year. ... The condition of the work of the Census Division and the condition of the final reports show clearly that the work of the Eleventh Census will be completed at least two years earlier than was the work of the Tenth Census. Carroll D. Wright Commissioner of Labor in Charge.

- ^ "The Future of the Internet—And How to Stop It » Chapter 1: Battle of the Boxes". Archived from the original on April 2, 2014. Retrieved April 2, 2014.

- ^ "Computer History Museum - International Business Machines Corporation (IBM) - The Entire Concept of Computers Has Changed...IBM System/360".

- ^ "IBM - Archives - History of IBM - 1970 - United States". January 23, 2003. Archived from the original on December 16, 2004.

- ^ "Mobile penetration". July 9, 2010.

Almost 40 percent of the world's population, 2.7 billion people, are online. The developing world is home to about 826 million female internet users and 980 million male internet users. The developed world is home to about 475 million female Internet users and 483 million male Internet users.

- ^ 10 Little Americans. Lulu.com. ISBN 978-0-615-14052-0. Retrieved September 15, 2014 – via Google Books.

- ^ "NASA's Apollo technology has changed the history". Sharon Gaudin. July 20, 2009. Retrieved September 15, 2014.

- ^ "Spaceline: History of Rocketry : Goddard".

- ^ "American Spaces – Connecting YOU with U.S."

- ^ Ngak, Chenda (July 4, 2012). "Made in the USA: American tech inventions". CBS News.

- ^ Hecht, M.; Hoffman, J.; Rapp, D.; McClean, J.; SooHoo, J.; Schaefer, R.; Aboobaker, A.; Mellstrom, J.; Hartvigsen, J.; Meyen, F.; Hinterman, E. (January 6, 2021). "Mars Oxygen ISRU Experiment (MOXIE)". Space Science Reviews. 217 (1): 9. Bibcode:2021SSRv..217....9H. doi:10.1007/s11214-020-00782-8. hdl:1721.1/131816.2. ISSN 1572-9672. S2CID 106398698.

- ^ WIPO. "Global Innovation Index 2023, 15th Edition". www.wipo.int. doi:10.34667/tind.46596. Retrieved October 17, 2023.

- ^ The Benefits of Medical Research and the Role of the NIH.

- ^ Medical Research Spending Doubled Over Past Decade, Neil Osterweil, MedPage Today, September 20, 2005
