Mamba is a deep learning architecture focused on sequence modeling. It was developed by researchers from Carnegie Mellon University and Princeton University...

12 KB (1,254 words) - 10:13, 25 April 2024

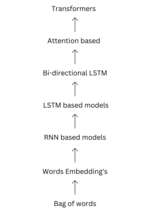

A transformer is a deep learning architecture developed by Google and based on the multi-head attention mechanism, proposed in a 2017 paper "Attention...

65 KB (8,115 words) - 17:00, 10 June 2024

billion. Architecture: Hybrid Mamba (SSM) Transformer using Mixture of Experts (MoE). Mamba: deep learning architecture; Mixture of experts: deep learning technique...

4 KB (328 words) - 02:46, 4 April 2024

Deep reinforcement learning (deep RL) is a subfield of machine learning that combines reinforcement learning (RL) and deep learning. RL considers the...

27 KB (2,935 words) - 06:52, 1 June 2024

Mamba (deep learning), a deep learning architecture; Mamba (website), a Russian social dating website; Mamba (roller coaster), in Missouri, US; Mamba (surname)...

1 KB (215 words) - 01:05, 13 January 2024

Q-learning algorithm. In 2014, Google DeepMind patented an application of Q-learning to deep learning, titled "deep reinforcement learning" or "deep Q-learning"...

29 KB (3,785 words) - 06:23, 6 April 2024

Reinforcement learning is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning. Reinforcement learning differs...

56 KB (6,584 words) - 15:33, 24 May 2024

for training multilayer perceptrons (MLPs) by deep learning were already known. The first deep learning MLP was published by Alexey Grigorevich Ivakhnenko...

62 KB (6,436 words) - 21:11, 12 June 2024

Multimodal learning, in the context of machine learning, is a type of deep learning using multiple modalities of data, such as text, audio, or images....

7 KB (1,697 words) - 14:24, 1 June 2024

original on 2019-07-10. Retrieved 2015-09-04. Yoshua Bengio (2009). Learning Deep Architectures for AI. Now Publishers Inc. pp. 1–3. ISBN 978-1-60198-294-0....

134 KB (14,693 words) - 09:27, 11 June 2024